Alan Turing was involved in some of the most important developments of the twentieth century. He invented the abstraction now called the Universal Turing Machine, which every undergraduate computer science major learns in college. He was involved in the great British Enigma code-breaking effort, which deserves at least some credit for the Allied victory in World War II. And last but not least, while working on building early digital computers post-Enigma, he described (in a fascinating philosophical paper that continues to puzzle and excite to this day) the thing we now call the Turing Test for artificial intelligence. His career was ultimately cut short, however, after he was convicted in Britain of “gross indecency” (in effect for being gay), and two years later was found dead in an apparent suicide.

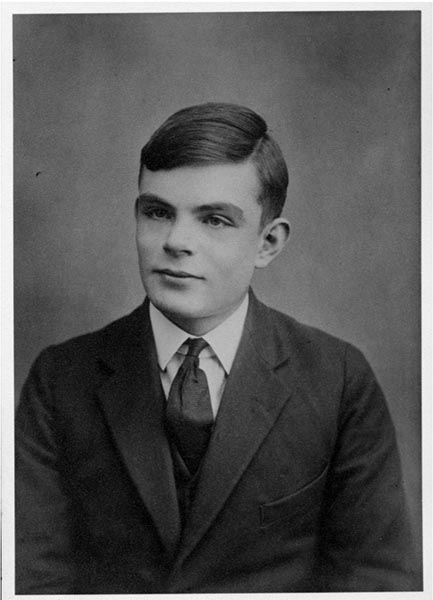

Alan Turing, circa 1927. Public domain, courtesy of The Turing Digital Archive.

The celebrations of Turing’s birth centenary began three years ago in 2012. As a result, far, far more people now know about him than perhaps ever before. 2014 was probably the climax, since nothing is as consecrating as having an A-list Hollywood movie based on your life: a film with big-name actors that garners cultural prestige, decent press, and of course, an Academy Award. I highly recommend Christian Caryl’s review of The Imitation Game (which covers Turing’s work in breaking the Enigma code). The film is so in thrall to the Cult of the Genius that it adopts a strategy not so much of humanizing Turing or giving us a glimpse of his life, but of co-opting the audience into feeling superior to the antediluvian, backward, not to mention homophobic, Establishment (here mostly represented by Tywin Lannister, I’m sorry, Commander Denniston). Every collective achievement, every breakthrough, every strategy, is credited to Turing, and to Turing alone. One scene from the film should give you a flavor of this: as his colleagues potter around trying to work out the Enigma encryption on pieces of paper, Turing, in a separate room all by himself, is shown building a Bombe (a massive, complicated machine!) alone, with his bare hands and a screwdriver!

Image: A Turing Bombe, Bletchley Park. Source: Gerald Massey, licensed for reuse under Creative Commons BY-SA 2.0.

The movie embodies a contradiction that one can also find in Turing’s life and work. On one hand, his work was enormously influential after his death: every computer science undergrad learns about the Turing Machine, and the lifetime achievement award of the premier organization of computer scientists is called the Turing Award. But on the other, he was relatively unknown while he lived (relatively being a key word here, since he studied at Cambridge and Princeton and crossed paths with minds ranging from Wittgenstein to John Von Neumann). Perhaps in an effort to change this, the movie (like many of his recent commemorations) goes all out in the opposite direction: it credits Turing with every single collective achievement, from being responsible for the entirety of the British code-breaking effort to inventing the modern computer and computer science.

So what exactly are Turing’s achievements and how might we understand his influence? It’s interesting that this discussion has been happening, of all places, in the Association for Computing Machinery’s (ACM) prestigious monthly journal, the Communications of the ACM. Historians of computing, including Thomas Haigh and Edgar Daylight, have written three articles for the Communications’ Viewpoint column trying to clarify the outstanding historiographic issues. Did Turing invent the modern computer? Did Turing start the discipline that, today, we call “computer science”? And was he responsible for modern computing’s most ground-breaking concept: the stored program? Short answers: no, no, and no. The longer answers are more interesting (and worth reading in the links above), but in what follows, I highlight the key tangles.

Did Turing Invent Modern Computing and/or Computer Science?

A modern rendering of the Turing Machine described in Turing’s “computable numbers” paper. Image created by GabrielF licensed for reuse under the Creative Commons Attribution-Share Alike 3.0 license. More videos can be found on the builder’s website.

Turing is acknowledged to have two formidable achievements (apart from his role in the Enigma code-breaking enterprise at Bletchley Park): the theoretical construct now known as the Turing Machine, which is today taught to all computer science undergraduates in a Theory of Computation class, and the theory of artificial intelligence he developed in his famous paper “Computing Machinery and Intelligence.”

Haigh’s 2014 article focuses on the question of how much engineers actually drew on Turing’s famous paper on “computable numbers” in building computing systems. (In this paper, Turing introduced his now-famous “computing machines” concept to ask whether one could deduce the provability of a statement in a formal system.) Haigh’s answer is no: there is very little evidence that the Turing Machine abstraction was relevant to the engineers immersed in the practical job of building digital computers.

As historians followed this progression of machines and ideas they found few mentions of Turing’s theoretical work in the documents produced during the 1940s by the small but growing community of computer creators. Turing is thus barely mentioned in the two main overview histories of computing published during the 1990s: Computer by Campbell-Kelly and Aspray, and A History of Modern Computing by Ceruzzi.

But this leads to another, slightly more tangled, question. The key to the modern general-purpose digital computer is something called the stored-program concept. This is the idea that the computer’s instructions for manipulating the data (aka the “program”) can be stored within the computer’s memory itself. In other words, to the computer, the data, and the instructions for manipulating the data are formally equivalent. This idea is widely credited to John Von Neumann, who introduced it in a famous report called “First draft of a report on the EDVAC” (the EDVAC being the successor to the ENIAC, widely considered to be the first digital computer). But one could argue that this is also the case with the Turing Machine: both the data and the instructions for manipulating the data are stored: the data on a tape, the instructions in a table.
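To make the "data on a tape, instructions in a table" description concrete, here is a minimal sketch of a one-tape machine in Python. It is only an illustration under stated assumptions: the function name `run_turing_machine`, the blank symbol `_`, the state names, and the bit-flipping table `FLIP_BITS` are all hypothetical choices made for this example, not anything taken from Turing's paper.

```python
BLANK = "_"  # assumed blank symbol for this sketch


def run_turing_machine(table, tape, state="start", halt="halt", max_steps=1000):
    """Run a one-tape machine and return the final tape contents.

    `table` maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is "L" or "R". The tape holds the data; the table holds
    the instructions.
    """
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    head = 0
    steps = 0
    while state != halt:
        if steps >= max_steps:
            raise RuntimeError("step limit reached without halting")
        symbol = cells.get(head, BLANK)
        write, move, state = table[(state, symbol)]
        cells[head] = write
        head += 1 if move == "R" else -1
        steps += 1
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, BLANK) for i in range(lo, hi + 1)).strip(BLANK)


# Hypothetical instruction table: flip every bit, halt at the first blank.
FLIP_BITS = {
    ("start", "0"): ("1", "R", "start"),
    ("start", "1"): ("0", "R", "start"),
    ("start", BLANK): (BLANK, "R", "halt"),
}

print(run_turing_machine(FLIP_BITS, "1011"))  # prints 0100
```

Note that in this sketch the table sits outside the tape, so the machine cannot read or rewrite its own instructions.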

The question therefore becomes: is Turing the inventor of the stored-program concept? Or to put it differently: how much were John Von Neumann (and others) who were designing the EDVAC influenced by Turing’s computable numbers paper? Haigh’s answers to these questions are no, and they weren’t.

Haigh answers the first question by pointing to the utterly different styles of “On Computable Numbers” and “First Draft.” The former, he says, is a paper on and about mathematical logic.

It describes a thought experiment, like Schrödinger’s famous 1935 description of a trapped cat shifting between life and death in response to the behavior of a single atom. Schrödinger was not trying to advance the state of the art of feline euthanasia. Neither was Turing proposing the construction of a new kind of calculating machine. As the title of his paper suggested, Turing designed his ingenious imaginary machines to address a question about the fundamental limits of mathematical proof. They were structured for simplicity, and had little in common with the approaches taken by people designing actual machines.

“First Draft,” on the other hand, is about the architecture of an actual machine that was soon to be built.

It described the architecture of an actual planned computer and the technologies by which it could be realized, and was written to guide the team that had already won a contract to develop the EDVAC. Von Neumann does abstract away from details of the hardware, both to focus instead on what we would now call “architecture” and because the computer projects under way at the Moore School were still classified in 1945. His letters from that period are full of discussion of engineering details, such as sketches of particular vacuum tube models and their performance characteristics.

At this point, however, things get more complicated. Haigh agrees that Von Neumann was at least aware of Turing’s 1936 paper (Turing’s subsequent Ph.D. was at Princeton and he did interact with Von Neumann). So is “First Draft” mostly just a translation of Turing’s ideas into a more technical vocabulary, as the philosopher Jack Copeland has proposed?

Tangles of Historiography

Here we get into real historiographic debates. Haigh argues that Von Neumann did not need Turing’s paper to argue for the stored-program concept. Moreover, the point of the “First Draft” was not just to propose stored-programs on tape, which already existed at that point, but stored-programs in internal memory in addressable memory locations. (This meant that programs could modify themselves, though the “First Draft” explicitly forbade that. Turing’s paper says nothing about programs modifying themselves.)
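The difference that addressable internal memory makes can be sketched with a toy accumulator machine. Everything here is an assumption made for illustration: the four-instruction set (`LOAD`, `ADD`, `STORE`, `HALT`), the function `run_stored_program`, and the memory layout are hypothetical and are not the EDVAC design. The sketch shows only what addressability makes possible, namely a program overwriting one of its own instructions.

```python
def run_stored_program(memory, max_steps=100):
    """Run a toy machine whose instructions and data share one memory.

    Each instruction is a tuple (op, address); data cells are plain values.
    Returns (accumulator, final_memory).
    """
    mem = list(memory)  # copy so the caller's program is left untouched
    acc = 0
    pc = 0  # program counter: address of the next instruction
    for _ in range(max_steps):
        op, addr = mem[pc]
        pc += 1
        if op == "HALT":
            return acc, mem
        elif op == "LOAD":
            acc = mem[addr]
        elif op == "ADD":
            acc += mem[addr]
        elif op == "STORE":
            mem[addr] = acc  # may overwrite data or an instruction
        else:
            raise ValueError(f"unknown operation: {op}")
    raise RuntimeError("step limit reached without halting")


PROGRAM = [
    ("LOAD", 5),   # 0: fetch the instruction in cell 5, treating it as data
    ("STORE", 3),  # 1: overwrite the instruction in cell 3 with it
    ("LOAD", 6),   # 2: acc = 40
    ("ADD", 6),    # 3: would add 40 again, but is replaced before it runs
    ("HALT", 0),   # 4: stop
    ("ADD", 7),    # 5: replacement instruction, stored like any other value
    40,            # 6: data
    2,             # 7: data
]

acc, final_memory = run_stored_program(PROGRAM)
print(acc)              # prints 42
print(final_memory[3])  # prints ('ADD', 7)
```

Without the first two instructions the program would compute 80. Because cell 3 is rewritten while the program runs, it computes 42 instead.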

Far more interestingly, Haigh goes on to argue that the differences between him and Copeland stem from their disciplinary perspectives: Haigh is a historian and Copeland a philosopher. Copeland’s evidence, Haigh argues, is detailed but it is often ahistorical and retrospective.

Copeland is deeply knowledgeable about computing in the 1940s, but as a philosopher approaches the topic from a different perspective from most historians. While he provides footnotes to support these assertions they are often to interviews or other sources written many years after the events concerned. For example, the claim that Turing was interested in building an actual computer in 1936 is sourced not to any diary entry or letter from the 1930s but to the recollections of one of Turing’s former lecturers made long after real computers had been built. Like a good legal brief, his advocacy is rooted in detailed evidence but pushes the reader in one very particular direction without drawing attention to other possible interpretations less favorable to the client’s interests.

If Turing’s theoretical work in the 1930s did not actually lead to the first computers (although Turing, after the war, did build and program some of the earliest digital computers in the UK), then why is he considered the “father of computer science”? Haigh argues that the work of Michael Mahoney and Edgar Daylight might be of use here. Mahoney’s work shows that early computer scientists in the fifties tried to show that their work was a continuation of:

…earlier work from mathematical logic, formal language theory, coding theory, electrical engineering, and various other fields. Techniques and results from different scientific fields, many of which had formerly been of purely intellectual interest, were now reinterpreted within the emerging framework of computer science.

In doing this, in trying to establish that their work was not just a continuation of electrical engineering, these early computer scientists reached back and recovered Turing’s 1936 paper as a foundational text. Daylight shows that the key actors here were a group of theorists interested in machine translation, that is, automatic translation between languages (a matter of national interest during the Cold War). These theorists, who were both applied mathematicians and computing experts, started searching for a formal theory of such translation; this allowed them to think about translation between natural languages on one hand, and between programming languages (e.g. for different computer architectures), on the other. The theoretical work of Turing, Alonzo Church, and Emil Post became key texts for these actors. This also explains why, very early on, in 1965, the ACM, driven by these actors, named its premier award for lifetime achievement the “Turing Award” (now, courtesy of Google, worth a cool million dollars, just like the Nobel).

The Primacy of Practice

Lest these seem to be merely historical debates about chronology, Haigh makes the point that they are really about the primacy of practices as opposed to ideas. The goal is to understand the efforts to build digital computers through the 1940s on their own terms, rather than as efforts to realize the Turing Machine (as George Dyson sometimes does in his 2012 book on John Von Neumann; the book is called Turing’s Cathedral although Turing doesn’t really appear in it!). Theory, Haigh argues, was not necessary to build the first digital computers, although that theory proved very useful in building the discipline called “computer science.” Ideas followed practices, and not the other way around. (Turing, to be fair, was involved in building and programming actual computers; however, it’s his theoretical ideas that seem to be most valued by Copeland and others.)

Our urge to believe the computer projects of the late 1940s were driven by a desire to implement universal Turing machines is part of a broader predisposition to see theoretical computer science driving computing as a whole. If Turing invented computer science, which is itself something of an oversimplification, then surely he must have invented the computer. The computer is, in this view, just a working through of the fundamental theoretical ideas represented by a universal Turing machine in that it is universal and stores data and instructions interchangeably.

The Imitation Game ends with an almost ludicrous last line: “[Turing’s] machine was never perfected, though it generated a whole field of research into what became nicknamed ‘Turing Machines.’ Today, we call them ‘computers.’” When lines like these, written by self-congratulatory screenwriters, become the general truth, the debate over the primacy of practices rather than ideas is definitely worth pursuing.

Selected References

Bullynck, Maarten, Edgar G. Daylight, and Liesbeth De Mol. 2015. “Why Did Computer Science Make a Hero out of Turing?” Communications of the ACM 58 (3): 37–39.

Daylight, Edgar G. 2014. “A Turing Tale.” Communications of the ACM 57 (10): 36–38.

Dyson, George. 2012. Turing’s Cathedral: The Origins of the Digital Universe. New York: Pantheon Books.

Haigh, Thomas. 2014. “Actually, Turing Did Not Invent the Computer.” Communications of the ACM 57 (1): 36–41.

Haigh, Thomas, Mark Priestley, and Crispin Rope. 2014. “Reconsidering the Stored-Program Concept.” IEEE Annals of the History of Computing 36 (1): 4–17.

1 Comment

Great essay. It might be useful to compare this to similar cases in science history. For example Fibonacci is credited with discovering the Fibonacci sequence. But the idea was lost until its independent discovery by Johann Kepler. Gregor Mendel discovers genetics; then his work is completely ignored until the independent duplication by Hugo de Vries and Carl Correns. History is full of “original contributions” that made absolutely no contribution at all; they were simply honored in retrospect. As you point out the remarkable phenomenon to be investigated is not the original genius, but how the persistent myth of an original genius obscures the reality of a collective and historically diffuse process.
