What does it mean to trust? In this post I explore the specific ways in which trust is produced in computer science education. I draw on ethnographic fieldwork conducted for my PhD in an undergraduate computer science program in Singapore, where I examined the “making” of computer scientists: how students are shaped as socio-technical persons through computer science education. During my fieldwork, I conducted participant observation in eight undergraduate computer science courses across all years (first to fourth), with a focus on required core courses for the computer science program; this post draws primarily on those observations. I also conducted interviews with students, professors, and administrators; policy and curriculum analysis; and participant observation in the department, university, and tech community more generally. I studied computer science myself as an undergraduate student, which led to my interest in this topic.

An empty computer science lab (Image by author)

I discuss here how students were taught to write “good” code and how that interrelates with producing trust (or rather distrust; more on that later). This post builds on a longer article about this topic (Breslin 2023). The trust that students learn is embedded in computer science education beyond the context of Singapore, as computing curricula, textbooks, professors, students, and a variety of other actors are internationally mobile. International (but US-centered) professional organizations such as the Association for Computing Machinery (ACM) and accreditation bodies also play a role in shaping computer science education in Singapore and elsewhere (Breslin 2018; Campbell 2017). At the same time, the form of trust cultivated in computer science education both intertwines with and differs from trust found in other communities and contexts. I discuss here, in particular, how trust in computer science education relates to forms of trust discussed by Shoshana Zuboff (2019) in relation to “surveillance capitalism.”

Trust in Computer Science Education

In my research, I found that students learned a variety of ways to produce trust in code, but key to that trust was separation: separating social from technical, self from code, and code from materiality. Through these forms of separation, students learned to assess an ideal of code, where its trustworthiness could be evaluated through seemingly objective standards of goodness, rather than, for example, its “located accountability” where the success of a design is based on (an always partial and situated) understanding of the relation of objects with their contexts of use (Suchman 1994; 2002).

Ways of assessing code which students were taught included code’s function, aesthetics, and efficiency. Students, for example, were taught to test code and assess its functionality based on whether it passed code-based tests. This included the compilation tests, but also learning to create “unit tests,” or “assertions” and “exceptions” (particular programming constructs that handle errors). These tests focus on errors within the code itself—whether it follows proper syntax and operates as the programmer expects. Unit tests and other error handling practices often operate by separating the code into pieces to test one bit at a time, or to test the integration of pieces together.
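For readers unfamiliar with these constructs, a minimal sketch in Python may help. The function `count_passing` and the test class below are hypothetical illustrations, not material from the courses observed; they only show how an exception, an assertion, and a unit test each check the code against itself rather than against its context of use.

```python
import unittest


def count_passing(scores, threshold):
    """Count how many scores are at least `threshold` (hypothetical example)."""
    # Exception: reports a problem with the input that the caller must handle.
    if threshold < 0:
        raise ValueError("threshold must be non-negative")
    count = sum(1 for score in scores if score >= threshold)
    # Assertion: states what the programmer expects to be true at this point.
    assert 0 <= count <= len(scores), "count cannot exceed the number of scores"
    return count


class CountPassingTest(unittest.TestCase):
    """Unit tests: exercise one small piece of code in isolation."""

    def test_counts_scores_at_or_above_threshold(self):
        self.assertEqual(count_passing([40, 55, 70], 50), 2)

    def test_negative_threshold_raises(self):
        with self.assertRaises(ValueError):
            count_passing([40, 55, 70], -1)
```

Saved to a file, the tests can be run with `python -m unittest`. Note that a passing test says only that the code behaves as its author expected, not whether a threshold of 50 is a sensible or fair standard for anyone being scored.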

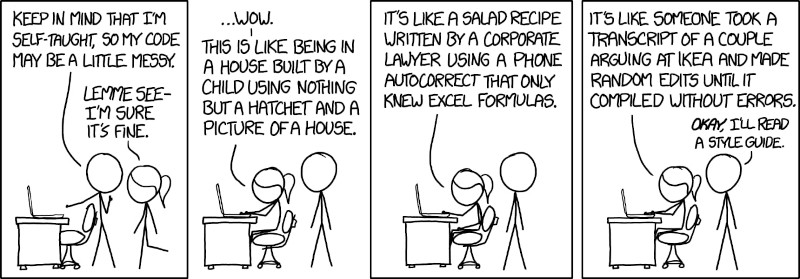

Students also learned to assess the aesthetics of code, although it was not framed as such, based on the ideal that code should look the same regardless of the person who wrote it. “Good” code was thus assessed independent from the contexts and persons of production, as well as from the contexts of its use. Students were given examples and style guides that outlined how code should look, including multiple facets of the naming of variables and other code constructs, proper indentation, proper nesting practices for code structures, commenting practices, and practices for minimizing code such as refactoring. As one course handout suggested, “it is important that the whole team/company use the same coding standard and that standard is commonly used in the industry.”
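The following sketch illustrates the kind of difference a style guide targets. Both functions are hypothetical and behave identically; the second loosely follows PEP 8, Python's widely used style guide, which is an assumption made for illustration and not the standard used in the courses described here.

```python
# Before: runs correctly, but terse names, cramped spacing, and deep nesting
# make the intent hard to read.
def f(l,t):
    r=[]
    for x in l:
        if x is not None:
            if x>=t:
                r.append(x)
    return r


# After: same behaviour, with descriptive names, a docstring, and less nesting.
def filter_at_least(values, threshold):
    """Return the values that are present and at least `threshold`."""
    return [v for v in values if v is not None and v >= threshold]
```

To the computer the two versions are interchangeable; the standard concerns how the code reads to other programmers.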

A comic about using style guides for coding (CC BY-NC 2.5, https://xkcd.com/1513/) [1]

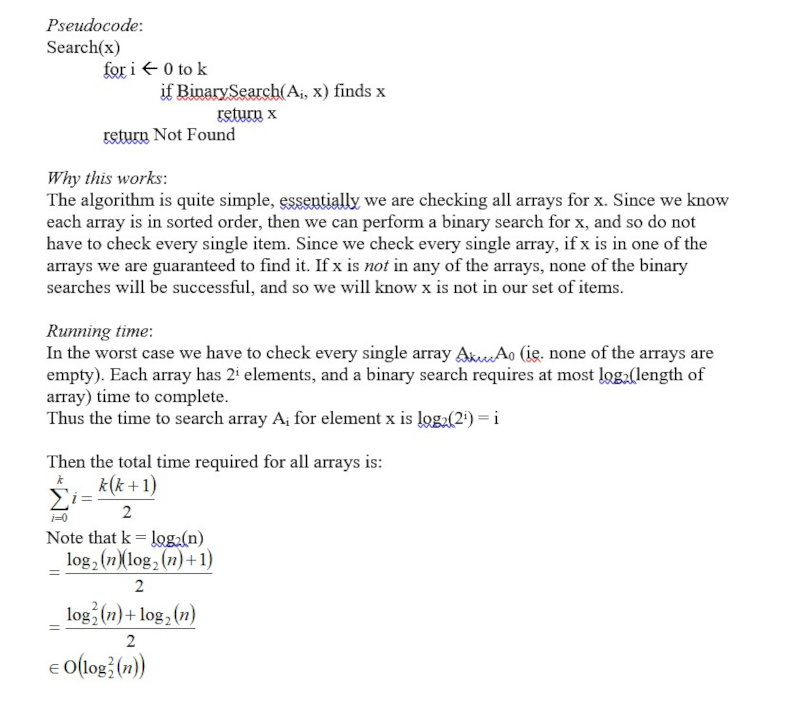

Throughout multiple courses, students were also taught how to assess code based on its efficiency, learning to evaluate the best, average, and worst-case scenarios for code and algorithms. This evaluation, however, was based on mathematical evaluations of the order of magnitude of “complexity” of an algorithm, not on how long it might run on a physical computer in practice. As part of this, code was often written as pseudocode to discuss the generalized operation of an algorithm, rather than its implementation in a particular programming language. The image below, for example, shows my own solution to an algorithms test question from my undergraduate studies, with both the pseudocode and calculation of complexity.

My solution to the design and runtime of a particular search algorithm (Image by author)
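The kind of efficiency evaluation described above can be sketched by counting steps rather than measuring time. The code below is a hypothetical illustration in Python, not the solution shown in the image: it counts how many list elements each search inspects, which is the quantity that best-case and worst-case analysis reasons about.

```python
def linear_search(items, target):
    """Return (index, comparisons); index is -1 if target is absent."""
    comparisons = 0
    for index, item in enumerate(items):
        comparisons += 1  # one element inspected
        if item == target:
            return index, comparisons
    return -1, comparisons


def binary_search(sorted_items, target):
    """Return (index, probes) for a sorted list; index is -1 if absent."""
    probes = 0
    low, high = 0, len(sorted_items) - 1
    while low <= high:
        middle = (low + high) // 2
        probes += 1  # one element inspected per loop iteration
        if sorted_items[middle] == target:
            return middle, probes
        if sorted_items[middle] < target:
            low = middle + 1   # discard the lower half
        else:
            high = middle - 1  # discard the upper half
    return -1, probes


data = list(range(1024))
best_linear = linear_search(data, 0)      # best case: target is first
worst_linear = linear_search(data, 1024)  # worst case: target is absent
worst_binary = binary_search(data, 1024)  # worst case for binary search
```

On this list of 1,024 elements, linear search inspects 1 element in the best case and 1,024 in the worst, so its worst case grows in proportion to the input size, written O(n). Binary search inspects at most 11 elements, growing with the logarithm of the input size, written O(log n). Neither figure says anything about how long the code takes on a particular machine.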

Taken together, these practices taught students to write “good” (and trustworthy) code and to become good programmers. In my article (Breslin 2023), I discuss these ways of establishing trust in code in more detail, including how these forms of trust operate alongside the cultivation of mistrust in computer science education. I ultimately argue that students learn to produce distrust rather than trust: they learn a system of values, based on the forms of separation mentioned above, for assessing good code and good coders, and this system enables them to withhold trust. That is, producing a seemingly objective standard of good code ideally eliminates the need to trust, a practice also known as trustless trust in relation to blockchain technologies. Of course, the distinctions between trust and distrust are subtle and, in practice, closely intertwined.

I also discuss how these practices produce tensions for the students in my research as they contended with the ways they were being cultivated as homogenous and comparable programmers, where with “programmers you can always like go to India and outsource it to others,” as one student highlighted (Breslin 2023: 14). Students thus felt the need to distinguish themselves, often by demonstrating their “passion” for programming through personal projects or participation in computing-related clubs. These demands, however, often reproduce inequalities related to who can pursue passion and who is seen to be able to demonstrate enough passion, and they ultimately reproduce norms of overwork (Breslin 2018; Cech 2021; Darling-Wolf and Patitsas 2024). The cultivation of dis/trust in computer science education also produces tensions with the trust implicit in an education system. Students must put some trust in their professors and educational program that what they are learning indeed cultivates them as “good” computer scientists, even as they are told to question everything, including the idea that teachers are always right, while assuming that “other coders are idiots,” as one professor suggested (Breslin 2023: 1).

Cultures of Trust

Different disciplinary approaches to trust and mistrust have treated them either as an essential attitude or worldview, or as a choice and strategy for managing social relations (see Carey 2017: 3-7). Yet, trust—and how it operates—is also cultural. Along these lines, Libuše Vepřek (2024) shows how trust is produced through sociomaterial practices, emerging from the human-technology relations of the biomedical research lab that she studied. The production of dis/trust in computer science education can be seen as a sociomaterial practice produced through the interaction of values about code, the code itself (which is not a homogenous entity, but rather many layers of code languages, compilers, and libraries), computer scientists (students and professors, in my case, but also the networks of expertise they are learning to become a part of), computer science educational pedagogy and curricula, and practices of judgment that students learn to enact. While this practice is not static, it does have some stability through the establishment and standardization of computer science as a disciplinary field (e.g. Abbate 2017). In this sense, trust in computer science education is a cultural practice produced through these specific human-technology relations.

The form of dis/trust that students learn in computer science education, however, is just one cultural form of trust. Zuboff (2015; 2019), for example, discusses the trust embedded in contractual relations in contrast to the trust (or lack thereof) in surveillance capitalism where “machine processes replace human relationships so that certainty can replace trust” (Zuboff 2019: 351). Contracts, Zuboff argues, have been fundamental to society, intertwining trust with governance and the rule of law. Contracts rely on trust and are a way to mitigate uncertainty (Zuboff 2015: 81). They also build on sociomaterial assemblages that include written contracts, legal infrastructures, and much more. On the other hand, Zuboff highlights the visions and practices by tech companies for ubiquitous computer mediation in our lives where contractual relations are ensured and required through code, rather than built through trusting social relations (Zuboff 2015: 85). I suggest dis/trust in computer science both differs from and intertwines with the respective forms of trust (or lack thereof) discussed by Zuboff.

(Re)Configurations

In particular, I suggest that practices around dis/trust in computer science education prioritize “machine processes” over “human relationships,” aligning with and contributing to the (absence of) trust of surveillance capitalism. By teaching forms of trust through separation, students do not learn how to build social relations or alternative forms of trust (whether contractual trust or otherwise) as part of their education. Students are rather taught to build decontextualized and universal “solutions” for anyone and everyone around the world. This occurs as students are trained to separate technical from social, self from code, and code from materiality, and to create code that is assessed primarily in terms of emic values. Students are reproducing the same systems through which they themselves are often evaluated: machine processes produced through practices of separation, where machine processes create the order and rules of society rather than social trust and contracts, following Zuboff’s warning of surveillance capitalism.

At the same time, the forms of separation that students learn as part of computer science education, ironically, limit the possibilities for students to examine and address alternative forms of trust. Students get caught in the ways they learn to render the world into problems that they have the power to solve through writing “good” code (Breslin 2018). The worldview of computer science education is a kind of trap that is difficult to escape (Breslin 2022). And, of course, the trust of contract law that Zuboff contrasts with machine processes is only one alternative means of producing trust. Anthropologists have highlighted approaches to relations and futures that are not inherently configured by contracts (e.g. Carey 2017; Stevenson 2009). Practices in computer science education make it difficult to address these other cultures of trust.

Thinking about the traps for attention that technology experts seek as part of the development of recommender systems, Nick Seaver argues that “the question to ask of traps may not be how to escape from them, but rather how to recapture them and turn them to new ends in the service of new worlds” (Seaver 2019: 433). In a similar vein, Lucy Suchman argues that “one form of intervention into current practices of technology development, then, is through a critical consideration of how humans and machines are currently figured in those practices and how they might be figured—and configured—differently” (Suchman 2007: 227). By exploring the ways that dis/trust is produced in computer science education, I highlight the concrete practices through which students learn to write code and, ultimately, create the programs that contribute to the ecosystems of technology that we all live with. Building on the work of scholars thinking and working to build new values and practices in computer science education (e.g. Fiesler et al. 2021; Malazita and Resetar 2019; Mayhew and Patitsas 2023; Washington et al. 2022), I do this to consider how computer science education can perhaps be (re)configured to build new worlds.

Footnotes

[1]The comic reads:

Panel 1

Two stick figures, A and B, are standing near a desk with a computer on it. A is gesturing to the computer.

A: Keep in mind that I’m self-taught, so my code may be a little messy.

B: Lemme see- I’m sure it’s fine.

Panel 2

B sits down at the computer, their hands at the keyboard.

B: …Wow. – This is like being in a house built by a child using nothing but a hatchet and a picture of a house.

Panel 3

B: It’s like a salad recipe written by a corporate lawyer using a phone autocorrect that only knew excel formulas.

Panel 4

B: It’s like someone took a transcript of a couple arguing at Ikea and made random edits until it compiled without errors.

A: Okay, I’ll read a style guide.

Acknowledgements

Thank you to all those who contributed to my PhD research, and to the Social Sciences and Humanities Research Council of Canada and Memorial University of Newfoundland for funding and support. Thank you to the members of the Code Ethnography Collective for an insightful discussion on trust and code that helped in developing the ideas for this post. The article this post builds on is part of a forthcoming special issue on Digital Mistrust in the Journal of Cultural Economy edited by Kristoffer Albris and James Maguire.

References

Abbate, Janet. 2017. “From Handmaiden to ‘Proper Intellectual Discipline’: Creating a Scientific Identity for Computer Science in 1960s America.” In Communities of Computing: Computer Science and Society in the ACM, edited by Thomas J Misa, 25–48. New York, NY and San Rafael, CA: Association for Computing Machinery and Morgan & Claypool Publishers.

Breslin, Samantha. 2018. “The Making of Computer Scientists: Rendering Technical Knowledge, Gender, and Entrepreneurialism in Singapore.” PhD Dissertation, St. John’s, NL: Memorial University of Newfoundland.

Breslin, Samantha. 2022. “Studying Gender While ‘studying up’: On Ethnography and Epistemological Hegemony.” Anthropology in Action 29 (2): 1–10. https://doi.org/10.3167/aia.2022.290201.

Breslin, Samantha. 2023. “Computing Trust: On Writing ‘Good’ Code in Computer Science Education.” Journal of Cultural Economy. https://doi.org/10.1080/17530350.2023.2258887.

Campbell, Scott. 2017. “The Development of Computer Professionalization in Canada.” In Communities of Computing: Computer Science and Society in the ACM, edited by Thomas J Misa, 173–98. Association for Computing Machinery and Morgan & Claypool Publishers.

Carey, Matthew. 2017. Mistrust: An Ethnographic Theory. Chicago, IL: Hau Books.

Cech, Erin A. 2021. The Trouble with Passion: How Searching for Fulfillment at Work Fosters Inequality. Oakland, CA: University of California Press. https://doi.org/10.2307/j.ctv1wdvwv3.

Darling-Wolf, Hana, and Elizabeth Patitsas. 2024. “Passion as Capital: The Cultural Production of ‘Good Computer Scientists.’” https://doi.org/10.31235/osf.io/bzthu.

Fiesler, Casey, Mikhaila Friske, Natalie Garrett, Felix Muzny, Jessie J. Smith, and Jason Zietz. 2021. “Integrating Ethics into Introductory Programming Classes.” In Proceedings of the 52nd ACM Technical Symposium on Computer Science Education, 1027–33. Virtual Event USA: ACM. https://doi.org/10.1145/3408877.3432510.

Malazita, James W, and Korryn Resetar. 2019. “Infrastructures of Abstraction: How Computer Science Education Produces Anti-Political Subjects.” Digital Creativity 0 (0): 1–13. https://doi.org/10.1080/14626268.2019.1682616.

Mayhew, Eric J., and Elizabeth Patitsas. 2023. “Critical Pedagogy in Practice in the Computing Classroom.” In Proceedings of the 54th ACM Technical Symposium on Computer Science Education V. 1, 1076–82. Toronto ON Canada: ACM. https://doi.org/10.1145/3545945.3569840.

Seaver, Nick. 2019. “Captivating Algorithms: Recommender Systems as Traps.” Journal of Material Culture 24 (4): 421–36. https://doi.org/10.1177/1359183518820366.

Stevenson, Lisa. 2009. “The Suicidal Wound and Fieldwork among Canadian Inuit.” In Being There: The Fieldwork Encounter and the Making of Truth, edited by John Borneman and Abdellah Hammoudi, 55–76. University of California Press.

Suchman, Lucy. 1994. “Working Relations of Technology Production and Use.” Computer Supported Cooperative Work (CSCW) 2: 21–39.

Suchman, Lucy. 2002. “Located Accountabilities in Technology Production.” Scandinavian Journal of Information Systems 14 (2): 91–105.

Suchman, Lucy. 2007. Human-Machine Reconfigurations: Plans and Situated Actions. Cambridge; New York: Cambridge University Press.

Vepřek, Libuše Hannah. 2024. At the Edge of Artificial Intelligence. Human Computation Systems and their intraverting relations. Bielefeld: transcript (in print).

Washington, Alicia Nicki, Shaundra Daily, and Cecilé Sadler. 2022. “Identity-Inclusive Computing: Learning from the Past; Preparing for the Future.” In Proceedings of the 53rd ACM Technical Symposium on Computer Science Education V. 2, 1182. SIGCSE 2022. New York, NY, USA: Association for Computing Machinery. https://doi.org/10.1145/3478432.3499172.

Zuboff, Shoshana. 2015. “Big Other: Surveillance Capitalism and the Prospects of an Information Civilization.” Journal of Information Technology 30 (1): 75–89. https://doi.org/10.1057/jit.2015.5.

Zuboff, Shoshana. 2019. The Age of Surveillance Capitalism: The Fight for a Human Future at the New Frontier of Power. New York: PublicAffairs.