I’m a sociocultural anthropologist by training. Until recently, my research focused on environmental issues in Ecuador. Yet my attempts to address the gaps left by traditional anthropological approaches to environmental issues quickly led me into topics that the anthropology I was trained in rarely touched on: institutional change over historical time, knowledge infrastructure work, and, above all, the functioning and interaction of modern forms of expertise.

I’m now a postdoctoral fellow in medical informatics at the U.S. Department of Veterans Affairs. It is an odd organizational setting to find myself in, as someone who thought of himself as an environmental anthropologist for years. Yet many of the big themes are strikingly familiar. In particular, I am surrounded by (and participating in) the expert design of sociotechnical contexts intended to be inhabited by other experts, an aspect of expertise that fascinated me in my environmentally focused research.

In my postdoc, I’m fortunate to have exposure to many of the technical nuts and bolts of infrastructure design for clinicians. In my remarks below, I share some reflections about “clinical practice guidelines,” a specific form of formal medical guidance that increasingly constitutes part of the digital infrastructure used by medical providers, designed and implemented in part by informaticists.

***

I’m currently learning about “clinical practice guidelines” at work. Here’s what I’ve gleaned:

A clinical practice guideline (CPG) is a normative framework that offers clinicians suggestions about how to approach specific kinds of clinical problems and that carries a stamp of approval from professional organizations or state agencies. By their authors’ own account, CPGs occupy one point along a biomedical science pipeline that begins with evidence, transforms that evidence into prescriptions for improving human health under carefully specified conditions, and ideally turns those norms into tools within the electronic health record that guide clinical decision-making.

Unsurprisingly, CPGs are understood to vary in quality. How a given CPG is judged may depend on the existing state of the scientific literature, the methods used to review that literature, the degree of stakeholder input, and how explicitly the problem and the recommendations are defined. Meta-guidelines therefore exist to help those who write CPGs, and to help practitioners evaluate the CPGs handed down to them. That is, the existence of formally recognized normative frameworks generates disputes about their validity, which in turn give rise to meta-normative frameworks for assessing the validity of norms.

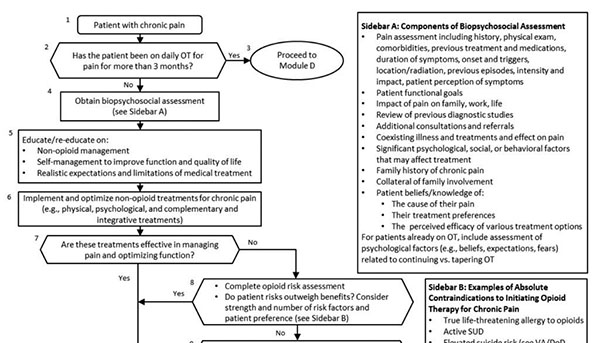

Below, you can see part of a CPG designed jointly by the U.S. Department of Veterans Affairs and the Department of Defense for opioid treatment of pain. The guideline itself runs to a couple hundred pages of text, but its substantive core consists of four “algorithms” that providers are to follow when deciding whether and how to start patients on opioids or take them off.

A computable norm: The VA/DoD opioid guideline

The opioid CPG could be evaluated in any number of ways. One reason its designers might consider it a “good CPG” is the simplicity of the determinations it asks providers to make. It contains a neat decision tree made up of questions with simple yes/no answers. In informatics parlance, it is a good CPG because it is “computable”: it rests on very simple Boolean logic and explicit definitions of its objects. It can be handed off to programmers whose specialty is programming, not clinical decision-making, and who can be counted on to know how to code “if-then” statements, but not how to taper patients off morphine. Because the CPG is computable, coding it will not require laborious deliberation about how to interpret particular branches of the decision tree.
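To make “computable” a bit more concrete, here is a minimal Python sketch of how a yes/no decision tree might be encoded so that a programmer can implement it without exercising clinical judgment. The question keys (`already_on_opioids` and so on), prompts, and outcome labels are hypothetical placeholders invented for illustration; they are not taken from the VA/DoD guideline, and this is not how any particular electronic health record implements CPGs.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Mapping, Union


@dataclass(frozen=True)
class Outcome:
    """A leaf of the tree: the recommendation the clinician is routed to."""
    text: str


@dataclass(frozen=True)
class Question:
    """An internal node: one yes/no question with a branch for each answer."""
    key: str     # explicit identifier for the data element being checked
    prompt: str  # wording shown to the clinician
    if_yes: Node
    if_no: Node


Node = Union[Question, Outcome]


def evaluate(node: Node, answers: Mapping[str, bool]) -> str:
    """Walk from the root to a leaf, following exactly one branch per question."""
    while isinstance(node, Question):
        if node.key not in answers:
            # No guessing: every question needs an explicitly recorded answer.
            raise KeyError(f"no answer recorded for {node.key!r}")
        node = node.if_yes if answers[node.key] else node.if_no
    return node.text


# HYPOTHETICAL questions and outcomes, for illustration only; they are NOT
# the content of the VA/DoD opioid guideline's algorithms.
TREE = Question(
    key="already_on_opioids",
    prompt="Is the patient currently on long-term opioid therapy?",
    if_yes=Question(
        key="benefits_outweigh_risks",
        prompt="Do documented benefits outweigh documented risks?",
        if_yes=Outcome("Route to continuation pathway"),
        if_no=Outcome("Route to tapering pathway"),
    ),
    if_no=Question(
        key="non_opioid_options_tried",
        prompt="Have non-opioid treatments been tried?",
        if_yes=Outcome("Route to initiation pathway"),
        if_no=Outcome("Route to non-opioid pathway"),
    ),
)

print(evaluate(TREE, {"already_on_opioids": True, "benefits_outweigh_risks": False}))
# → Route to tapering pathway
```

Keeping the tree as data, separate from the small `evaluate` loop, means the branching can be reviewed by clinicians without reading any traversal code. And because every question requires an explicitly recorded answer, a missing data element raises an error rather than being silently filled in, which mirrors the emphasis on explicit definitions above.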

In other words, because the CPG’s ultimate “home” is expected to be an automated warning or worksheet in the electronic health record, its designers anticipate handing it to others further along a sociotechnical chain who are not clinical experts. This anticipation conditions the CPG in advance by encouraging its formulation as a set of unambiguous formal logical statements. Infrastructure thus plays a meta-normative role, quietly structuring how normativity will be recognized, defined, and enacted in clinical practice. For better or for worse.

***

If you’re interested in reading more musings by an environmental anthropologist stumbling through the world of medical informatics, you can find them on my personal blog.