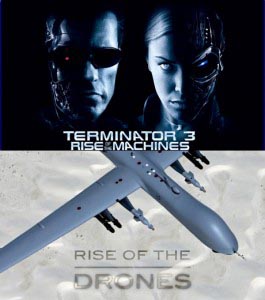

In the opening scene of the recent NOVA documentary, Rise of the Drones, the narrator ominously tells us that a revolution is underway. “Are we,” he leads, “approaching a time when movies like The Terminator become our reality?” A clip from Terminator 3 fades in and out, with two humans cowering in fear and whispering, “Oh God. It’s the machines. They’re starting to take over…” The narrator continues, “a time when machines fly, think, and even kill on their own?”

My dissertation research focuses on how technologies used in remote warfare are changing conceptions of warfare and experiences of agency within human-computer systems. These technologies include what the Air Force prefers to call RPAs (Remotely Piloted Aircraft), also known as UAVs (Unmanned Aerial Vehicles) and more commonly as drones. While my fieldwork looks at individual experiences and institutional narratives within military communities, a larger backdrop to my research is the popular conception of robotics and drones, shaped by mass media coverage and by the longstanding cultural paranoia that technological creations, once unleashed in the world, will escape human control and stop at nothing short of humankind’s destruction. In an important sense, these extreme narratives obscure more consequential and immediate discussions that should be had about robotics and artificial intelligence, and more specifically, about the use of unmanned systems.

It will likely not surprise CASTAC readers that popular understandings of technologies, especially in the field of robotics, differ considerably from the on-the-ground reality of technological capabilities. Lucy Suchman’s blog, Robot Futures, has looked at numerous examples of such misconceptions. Nor is it unexpected that the terms “autonomous” and “unmanned,” so frequently applied to robotic technologies, are misnomers that obscure the very real human labor involved, from producing and operating hardware (from flying to interpreting data) to coding software. From Marx’s Capital to the more recent work of Shoshana Zuboff, Lucy Suchman, and many others, research has shown that advances in automation and robotics do not so much do away with the human as obscure the ways in which human labor and social relations are reconfigured.

And yet, the circulation of terms like autonomous and unmanned continues to frame much of the public discussion surrounding robotics in areas as diverse as the military and healthcare (see, for instance, this month’s article in The Atlantic, The Robot Will See You Now). Although drones are termed “unmanned” aerial vehicles, every operation requires a team of at least three Air Force personnel, and sometimes a team of fifteen or more. (The precise nature of CIA-operated drones, a program separate from that of the Air Force, is not officially public.) This is in addition to the people who code the software and produce the computer and drone hardware, modes of labor that have traditionally been marginalized in conceptions of computing. And it is, of course, also in addition to the human lives on the ground over which the drones fly.

The final section of the NOVA documentary looks at new sensing technologies under development. The term autonomous is used numerous times and yet never defined. This is a problem because computer science understandings of autonomy differ substantially from popular ones. In computer science, an autonomous robot is one with a sophisticated awareness of its surroundings and the ability to react in light of that awareness. In popular usage, autonomous implies that the robot could act entirely on its own and of its own accord, like the Cylons or the Terminator.

Paul Eremenko, the Deputy Director of the Tactical Technology Office within DARPA, the Pentagon’s blue-sky research and development program, says in an interview clip, “I think if we were to ask most autonomy researchers or most AI researchers about if the ‘rise of the machines’ type scenario is a real concern, their response would be, ‘We should be so lucky.’ In fact, if we could get little slivers of that kind of adaptive or cognitive capability that would be a very significant breakthrough over where we stand today.” Yet the subtext of the documentary and its visual rhetoric suggest otherwise. A low-pitched sound pulses ominously throughout. Drones fly through the sky, isolated from their ties to human work and intention. (In fact, most media outlets illustrate stories about drones with photographs of the machine in flight, with humans absent from the frame.) In the final scene, the narrator says, “The ability to respond to the unknown may be the final hurdle if drones are ever able to fully replace manned planes… and start making decisions on their own.” Ominous indeed. A clip of an interview with Abe Karem, the main engineer of the Predator platform, plays in response: “I think we’re far. But let me say, I’m the last guy who says impossible.” The tone of the documentary tends to push the audience toward this extreme paranoia even as the narrator reassures us that a machine still can’t do what a human can.

That reassurance may feel false and placating, but it is true: humans are implicated in every moment of remote warfare. My hope is that my research can bring a greater understanding of the social implications of remote warfare to multiple communities. I look forward to sharing more of my research as it continues in the coming months.

Along those lines, I also want to take this opportunity to tell the CASTAC community about a group that is beginning to formally coalesce thanks to the organizing of Zoe Wool and Ken MacLeish: the Military and Security Critical Interest Group, which brings together people working ethnographically with critical conceptualizations of military life and security institutions, technologies, and populations, or related issues. If you’d like more information or would like to join the listserv, email MSCIGListserv@gmail.com.

I’ll leave you with a few links for further exploration:

Marcel LaFlamme, a fellow graduate student in the dissertation phase, on the emerging UAV industry in North Dakota.

Jessica Riskin’s classic essay, “Eighteenth-Century Wetware,” which explores the socio-historical specificity of what constitutes the imitation of life by machines.

A collaborative photo essay, “Soldier Exposure, Technical Publics,” on Public Books that repositions current and historical images of wounded soldiers.