(Editor’s Note: This blog post is part of the Thematic Series Data Swarms Revisited)

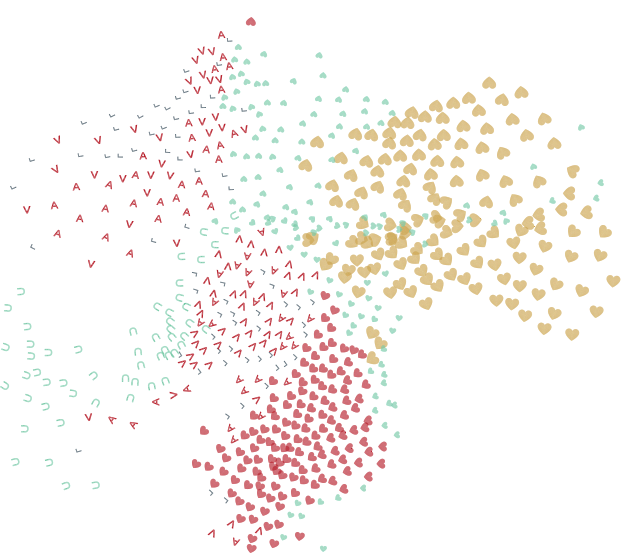

Illustration of algorithmically simulated flocking behavior (2021, created by Anna Lukina)

With the growing size of historical data available to researchers and industrial practitioners, developing algorithms for automating numerous aspects of everyday human life has become ever more dependent on data-driven techniques. Previous approaches relying on formal methods and global optimization no longer meet the increasing scalability requirements of modern applications. Particle Swarm Optimization (PSO), one of the most successful global optimization algorithms, continues to be employed in practice, but more often as a component of more complex approaches, since on its own it provides only partial solutions to complex modern optimization problems. PSO was first introduced by Kennedy and Eberhart (1995), who were inspired by one of the most mesmerizing phenomena in nature: bird flocking. As in any collective behavior, birds converge to an equilibrium formation that maximizes their benefits as individuals and as a society overall. V-formation, as a particular example of such a formation, can be formulated as an optimization problem and achieved algorithmically (e.g., Lukina et al. 2017). The birds, in this case, maximize their energy savings by taking advantage of the uplift generated by the flapping wings of the birds in front of them. The resulting emergent behavior in V-formation assigns a role to each bird: who should lead and who should follow. The larger the flock, the higher the burden on the leader to maintain this behavior globally. A large formation is therefore likely to break into smaller flocks that elect local leaders, which requires a more resilient solution via distributed algorithms (e.g., Tiwari et al. 2017). As the number of smaller flocks grows to become a swarm, machine-learning techniques come into play quite naturally. Had we had access to a hundred thousand observations of flocking behavior, modern autonomous systems could have learned to flock like birds.
This much data and control authority, however, comes with an even bigger responsibility.
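
For readers who have not seen PSO in code, here is a minimal sketch of the idea mentioned above. It uses the common inertia-weight variant of the update rule, which is not necessarily the exact 1995 formulation, and every name and parameter value (`pso`, `inertia`, `c_personal`, `c_social`, the `sphere` test function) is an illustrative assumption rather than anything taken from the cited works.

```python
import random


def pso(objective, dim, n_particles=20, iters=200, bounds=(-5.0, 5.0),
        inertia=0.7, c_personal=1.5, c_social=1.5, seed=0):
    """Minimize `objective` with a basic particle swarm (illustrative only)."""
    rng = random.Random(seed)
    lo, hi = bounds
    # Each particle has a position, a velocity, and a memory of its best position.
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    best_pos = [p[:] for p in pos]
    best_val = [objective(p) for p in pos]
    # The swarm also shares the best position found by any particle so far.
    g = min(range(n_particles), key=best_val.__getitem__)
    g_pos, g_val = best_pos[g][:], best_val[g]

    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Blend momentum, pull towards own best, and pull towards swarm best.
                vel[i][d] = (inertia * vel[i][d]
                             + c_personal * r1 * (best_pos[i][d] - pos[i][d])
                             + c_social * r2 * (g_pos[d] - pos[i][d]))
                # Move and clamp the particle to the search bounds.
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))
            val = objective(pos[i])
            if val < best_val[i]:
                best_val[i], best_pos[i] = val, pos[i][:]
                if val < g_val:
                    g_val, g_pos = val, pos[i][:]
    return g_pos, g_val


def sphere(x):
    """Toy objective with its minimum at the origin (an assumption for the demo)."""
    return sum(v * v for v in x)


best_x, best_f = pso(sphere, dim=2)
print(best_x, best_f)
```

The single shared best position plays the role of the flock's leader. The distributed, multi-leader setting discussed above would replace it with neighborhood-level bests, which this sketch does not attempt.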

Machine-learning techniques have achieved unprecedented performance in modern applications. In particular, neural networks enable training controllers (software that manages communication between the entities of an automated system) to complete a variety of tasks without human supervision. State-of-the-art learning techniques produce neural-network models that have neither an algorithm to identify what they do not know nor the means to interact with their human user before making a decision. When deployed in the real world, such neural-network-based controllers work reliably in scenarios they have seen during training. In an unfamiliar situation, however, they can exhibit unpredictable behavior, thus compromising the safety of the whole system. The levels of autonomy through which humanity and technology have been transitioning together were recently revisited by Fisher et al. (2021). From level zero with no automation, we have moved towards assistance systems where the human remains in control. The higher we proceed in automation, the greater the performance benefit we achieve, but the higher the risks we face. As automated technology perfects more complex tasks, more control authority is shifted from the human to the system. With the automated system given more freedom to make decisions and take action without human intervention, possible failures may result in catastrophic events before the human can detect them and take over control (Isbell 2020). This risk is further intensified when the human does not devote the necessary attention to monitoring the execution of the algorithm. One reason could be the lack of a comfortable interface between the two and the limited interpretability of the system’s actions. Are we ready to delegate control authority to a fully automated system?

Following the idea conveyed by Stuart Russell in his book Human Compatible: Artificial Intelligence and the Problem of Control (2019), rather than striving to guarantee the safety of autonomous technologies after they are built, researchers must design the algorithms controlling them with safety in mind. Artificial intelligence is capable of relieving us of the burden of numerous tedious tasks. However, current approaches, despite their impressive performance, provide little insight into their decision-making, leaving us in the dark and unable to intervene. Instead, AI-based control algorithms must be designed to be “correct,” or at the very least wrapped into human-interpretable frameworks. This calls for close collaboration between human and artificial intelligence, requiring a language of interaction and algorithms to enable bidirectional learning between the two. To see the full picture, we need to go through the data pipeline systematically, identifying the areas where human feedback can be leveraged most effectively.

Nine million bicycles

How much is “enough” and what is “fair” are two of the main questions occupying the minds of researchers in AI in recent years. For the algorithms to make well-weighted decisions, they need to be confident in everything they should know about the matter in question. Humans, who are sometimes unable to answer with full certainty, train these algorithms. Our best effort is therefore to supply the algorithm with all the historical data available for it to understand how we make decisions. These data, however, date back well before the history of AI. As the norms of our society evolve and new behaviors emerge, we accumulate more recent data that is closer to our modern view of reality. Unfortunately, the amount of these data is negligibly small compared to the vast historical observations. The algorithm learns the patterns that are prevalent in what it is given for training. When unattended, this might eventually train the algorithm to be biased against the groups insufficiently represented in the training data (Silberg & Manyika 2019). Researchers have made considerable efforts to balance and enrich the data used for learning. Is it all about the data? The Data Nutrition Project (Holland et al. 2018), for example, aims at demystifying how AI perpetuates systemic biases, from data processing through algorithm development. For instance, when a machine-learned algorithm is deciding whose loan request to approve, it can be biased against disadvantaged groups. Being rejected for financing, these groups will be systematically discriminated against.
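
As a rough illustration of the loan example, the sketch below reports, for each group, its share of a dataset and its approval rate. The function name `group_report`, the record format, and the toy records are all made up for this illustration. They are not real data and are not drawn from any of the cited works.

```python
from collections import Counter


def group_report(records):
    """Summarize (group, approved) pairs: dataset share and approval rate per group."""
    totals = Counter()
    approvals = Counter()
    for group, approved in records:
        totals[group] += 1
        approvals[group] += int(approved)
    n = sum(totals.values())
    return {
        g: {"share": totals[g] / n, "approval_rate": approvals[g] / totals[g]}
        for g in totals
    }


# Hypothetical toy records: group "B" is both under-represented and approved less often.
toy = ([("A", True)] * 6 + [("A", False)] * 2
       + [("B", True)] * 1 + [("B", False)] * 1)
report = group_report(toy)
for group, stats in report.items():
    print(group, stats)
```

A gap in approval rates does not by itself prove unfair treatment, but together with a small share it flags where a human should look more closely before the data is used for training.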

Know what you do not know

As we evolve, it is only natural that algorithms evolve with us. Adaptability is the greatest strength of humanity, but this is not yet the case for artificial intelligence. After being trained once, a system is inherently unreliable on the things it has never seen during training. Consequently, a member of a group novel to the trained system is likely to be associated with one of the “known” groups. Instead of consulting the human about this decision and adapting to the changed environment, the algorithm will proceed with its operation. This challenge is aggravated by the black-box nature of the decision-makers produced by machine learning. State-of-the-art logic-based formal methods that provide greater interpretability and transparency suffer from limited scalability when used in applications currently dominated by learning techniques. If the product of machine learning cannot guarantee correctness by design, it must be accompanied by a formal framework that interfaces with the human and leverages their feedback to help the system learn what it does not presently know. Humans should remain the ultimate authority in making decisions suggested by the learning algorithm. This requires the algorithm to be accompanied by explanations of its actions and a human-interpretable interface (Henzinger et al. 2020). This allows us to learn from the automated system, whose computational capabilities exceed ours on certain tasks, and to provide feedback more effectively. Full automation by no means implies that the human can sit back and relax, but rather that they have acquired a capable collaborator with whom to achieve greater heights together.
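
One way to picture "knowing what you do not know" is a monitor that remembers, per class, the range of feature values seen during training and defers to the human when a new input falls outside it. The sketch below is a heavily simplified illustration loosely inspired by the abstraction-based monitoring idea cited above. It is not the authors' implementation, and `BoxMonitor`, `decide`, the `margin` parameter, and the toy features are all assumptions.

```python
class BoxMonitor:
    """Keeps one axis-aligned bounding box of training features per class."""

    def __init__(self, margin=0.0):
        self.margin = margin  # tolerance added around each box
        self.boxes = {}       # label -> (lows, highs)

    def fit(self, features, labels):
        for x, y in zip(features, labels):
            if y not in self.boxes:
                self.boxes[y] = (list(x), list(x))
            else:
                lows, highs = self.boxes[y]
                for d, v in enumerate(x):
                    lows[d] = min(lows[d], v)
                    highs[d] = max(highs[d], v)
        return self

    def is_familiar(self, x, predicted_label):
        """True if x lies inside the box recorded for the predicted class."""
        if predicted_label not in self.boxes:
            return False
        lows, highs = self.boxes[predicted_label]
        return all(lo - self.margin <= v <= hi + self.margin
                   for v, lo, hi in zip(x, lows, highs))


def decide(monitor, x, predicted_label):
    """Accept the model's prediction only when the input looks familiar."""
    if monitor.is_familiar(x, predicted_label):
        return predicted_label
    return "ask_human"


# Hypothetical two-dimensional features for two known classes.
monitor = BoxMonitor().fit(
    [(0.0, 0.0), (1.0, 1.0), (5.0, 5.0), (6.0, 7.0)],
    ["a", "a", "b", "b"],
)
print(decide(monitor, (0.5, 0.5), "a"))  # inside the box for "a"
print(decide(monitor, (3.0, 3.0), "a"))  # outside every training range for "a"
```

The human's answer to an "ask_human" case could then be fed back through `fit`, which is one simple way to realize the feedback loop described above.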

Breaking the chain

The challenges of designing reliable artificial intelligence with humans as the ultimate authority are not limited to those discussed in this post. Nevertheless, addressing the concerns we enumerated will be an important step towards a smoother and safer transition to full automation. Safety and fairness considerations are required at every step of the data pipeline: from data collection through algorithm design to deployment in the real world. In a highly dynamic environment, both humans and AI must continuously adapt to each other and collaboratively make robust decisions in the face of uncertainty. Unpredictable changes in the real world require algorithms to be responsible for detecting them and humans to take action.

Read more of the Data Swarms Series:

Data Swarms Revisited – New Modes of Being by Christoph Lange

Angelology and Technoscience by Massimiliano Simons

Multiple Modes of Being Human by Johannes Schick

Swarming Syphilis: On the Reality of Data by Eduardo Zanella

On Drones and Ectoplasms: Breath of Gaia by Angeliki Malakasioti

Fetishes or Cyborgs? Religion as technology in the Afro-Atlantic space by Giovanna Capponi

References

Fisher, M., Mascardi, V., Rozier, K. Y., Schlingloff, B.-H., Winikoff, M., and Yorke-Smith, N. (2021). Towards a framework for certification of reliable autonomous systems. Autonomous Agents and Multi-Agent Systems, 35(1):1–65.

Henzinger, T.A., Lukina, A., and Schilling, C. (2020). Outside the box: Abstraction-based monitoring of neural networks. In Proceedings of 24th European Conference on Artificial Intelligence.

Holland, S., Hosny, A., Newman, S., Joseph, J., and Chmielinski, K. (2018). The dataset nutrition label: A framework to drive higher data quality standards. arXiv preprint arXiv:1805.03677.

Isbell, C. (2020). Invited Talk: You Can’t Escape Hyperparameters and Latent Variables: Machine Learning as a Software Engineering Enterprise. Virtual NeurIPS 2020.

Kennedy, J. and Eberhart, R. (1995). Particle swarm optimization. In Proceedings of ICNN’95-international conference on neural networks, volume 4: 1942–1948. IEEE.

Lukina, A., Esterle, L., Hirsch, C., Bartocci, E., Yang, J., Tiwari, A., Smolka, S. A., and Grosu, R. (2017). ARES: Adaptive receding-horizon synthesis of optimal plans. In International Conference on Tools and Algorithms for the Construction and Analysis of Systems, pp. 286–302. Springer.

Russell, S. (2019). Human compatible: Artificial intelligence and the problem of control. Penguin.

Silberg, J. and Manyika, J. (2019). Notes from the AI frontier: Tackling bias in AI (and in humans). McKinsey Global Institute.

Tiwari, A., Smolka, S. A., Esterle, L., Lukina, A., Yang, J., and Grosu, R. (2017). Attacking the V: On the resiliency of adaptive-horizon MPC. In International Symposium on Automated Technology for Verification and Analysis, pp. 446–462. Springer.
