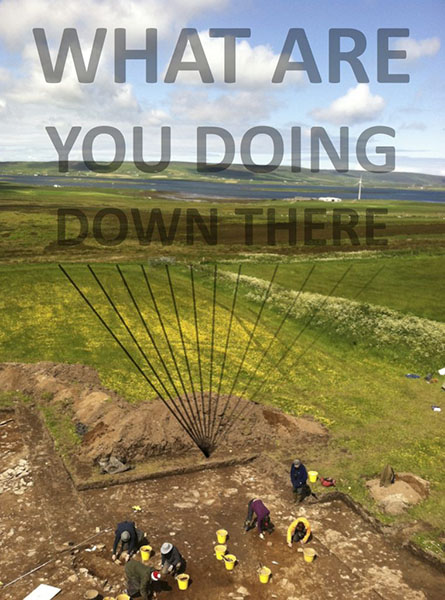

Water is, among its many attributes, fluid. Left to its own devices it runs, spills, flows, leaks, crashes, and splashes. Holding H₂O still is nearly impossible above 0°C. In water management, containment is an ambitious enough goal; control, with luck, comes after. Mastery over the whims of water is of paramount concern today across a number of socio-environmental spheres: coasts flood, deserts desiccate, Flint contaminates, and California incinerates. The various infrastructural and political hydrology problems posed by Anthropocene conditions have inspired a number of technocratic and neoliberal solutions (e.g., the $118 billion storm surge gates in New York or the monetization of dehydration in Africa). A brief look at archaic relationships between water and society, however, suggests conceptual alternatives to such high-energy and high-cost survival designs. Two such examples are examined below: the gravitational plumbing at the Neolithic* site of Smerquoy in the Orkney Islands and the Persian yakhchāl, a pre-Alexandrian ‘icebox’.